Publications

Publications in reverse chronological order.

*Authors contributed equally

2025

- Energy-Efficient Autonomous Aerial Navigation with Dynamic Vision Sensors: A Physics-Guided Neuromorphic Approach
  Sourav Sanyal*, Amogh Joshi*, Manish Nagaraj, Rohan Kumar Manna, and Kaushik Roy
  Under review; preprint arXiv:2502.05938, 2025

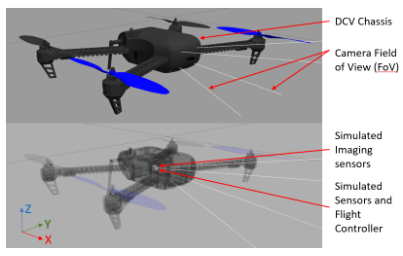

Vision-based object tracking is a critical component of autonomous aerial navigation, particularly for obstacle avoidance. Neuromorphic Dynamic Vision Sensors (DVS), or event cameras, are inspired by biological vision and offer a promising alternative to conventional frame-based cameras. These cameras detect changes in intensity asynchronously, even in challenging lighting conditions, with a high dynamic range and resistance to motion blur. Spiking neural networks (SNNs) are increasingly used to process these event-based signals efficiently and asynchronously. Meanwhile, physics-based artificial intelligence (AI) provides a means to incorporate system-level knowledge into neural networks via physical modeling, which enhances robustness and energy efficiency and provides symbolic explainability. In this work, we present a neuromorphic framework for autonomous drone navigation, focused on detecting and navigating through moving gates while avoiding collisions. We use event cameras to detect moving objects through a shallow SNN architecture in an unsupervised manner. This is combined with a lightweight, energy-aware physics-guided neural network (PgNN) trained with depth inputs to predict optimal flight times, generating near-minimum-energy paths. The system is implemented in the Gazebo simulator and integrates a sensor-fused vision-to-planning neuro-symbolic framework built with the Robot Operating System (ROS) middleware. This work highlights the potential of integrating event-based vision with physics-guided planning for energy-efficient autonomous navigation, particularly for low-latency decision-making.

@inproceedings{sanyal2025energyefficientautonomousaerialnavigation, title = {Energy-Efficient Autonomous Aerial Navigation with Dynamic Vision Sensors: A Physics-Guided Neuromorphic Approach}, author = {Sanyal, Sourav and Joshi, Amogh and Nagaraj, Manish and Manna, Rohan Kumar and Roy, Kaushik}, year = {2025}, eprint = {2502.05938}, archiveprefix = {arXiv}, primaryclass = {cs.RO}, url = {https://arxiv.org/abs/2502.05938}, doi = {10.48550/arXiv.2502.05938}, }

- SHIRE: Enhancing Sample Efficiency using Human Intuition in REinforcement Learning
  Amogh Joshi, Adarsh Kosta, and Kaushik Roy
  2025 IEEE International Conference on Robotics and Automation (ICRA), 2025

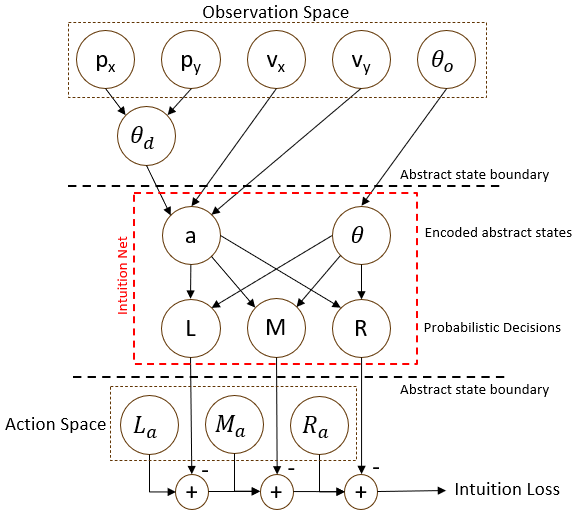

The ability of neural networks to perform robotic perception and control tasks such as depth and optical flow estimation, simultaneous localization and mapping (SLAM), and automatic control has led to their widespread adoption in recent years. Deep Reinforcement Learning (DeepRL) has been used extensively in these settings, as it avoids the unsustainable training costs associated with supervised learning. However, DeepRL suffers from poor sample efficiency, i.e., it requires a large number of environmental interactions to converge to an acceptable solution. Modern RL algorithms such as Deep Q-Learning and Soft Actor-Critic attempt to remedy this shortcoming but cannot provide the explainability required in applications such as autonomous robotics. Humans intuitively understand the long-horizon sequential tasks common in robotics. Properly using such intuition can make RL policies more explainable while enhancing their sample efficiency. In this work, we propose SHIRE, a novel framework for encoding human intuition using Probabilistic Graphical Models (PGMs) and using it in the DeepRL training pipeline to enhance sample efficiency. Our framework achieves 25-78% sample efficiency gains across the environments we evaluate, at negligible overhead cost. Additionally, by teaching RL agents the encoded elementary behavior, SHIRE enhances policy explainability. A real-world demonstration further highlights the efficacy of policies trained using our framework.

@inproceedings{joshi2025shire, author = {Joshi, Amogh and Kosta, Adarsh and Roy, Kaushik}, booktitle = {2025 IEEE International Conference on Robotics and Automation (ICRA)}, title = {SHIRE: Enhancing Sample Efficiency using Human Intuition in REinforcement Learning}, year = {2025}, volume = {}, number = {}, pages = {13399-13405}, keywords = {Training;Simultaneous localization and mapping;Costs;Q-learning;Supervised learning;Pipelines;Rapid prototyping;Probabilistic logic;Encoding;Robots}, url = {https://ieeexplore.ieee.org/document/11128459}, doi = {10.1109/ICRA55743.2025.11128459}, }

- Neuro-LIFT: A Neuromorphic, LLM-based Interactive Framework for Autonomous Drone FlighT at the Edge
  Amogh Joshi*, Sourav Sanyal*, and Kaushik Roy
  Preprint arXiv:2501.19259, 2025

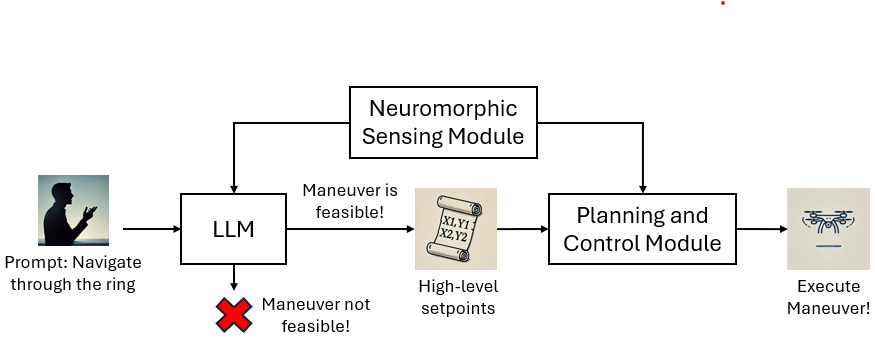

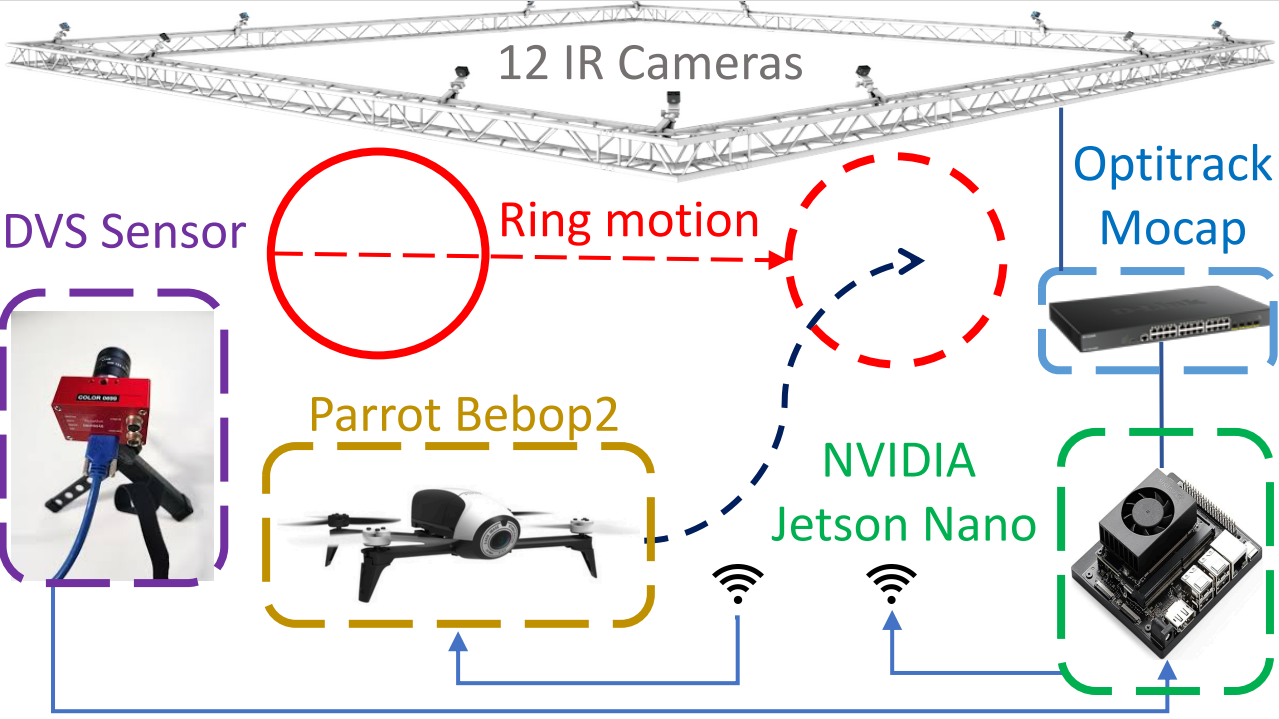

The integration of human-intuitive interactions into autonomous systems has been limited. Traditional Natural Language Processing (NLP) systems struggle to understand context and intent, severely restricting human-robot interaction. Recent advances in Large Language Models (LLMs) have changed this dynamic, enabling intuitive, high-level communication through speech and text and bridging the gap between human commands and robotic actions. Additionally, autonomous navigation has emerged as a central focus in robotics research, with artificial intelligence (AI) increasingly leveraged to enhance these systems. However, existing AI-based navigation algorithms face significant challenges in latency-critical tasks that demand rapid decision-making. Traditional frame-based vision systems, while effective for high-level decision-making, suffer from high energy consumption and latency, limiting their applicability in real-time scenarios. Neuromorphic vision systems, which combine event-based cameras and spiking neural networks (SNNs), offer a promising alternative by enabling energy-efficient, low-latency navigation. Despite their potential, real-world implementations of these systems, particularly on physical platforms such as drones, remain scarce. In this work, we present Neuro-LIFT, a real-time neuromorphic navigation framework implemented on a Parrot Bebop2 quadrotor. Leveraging an LLM for natural language processing, Neuro-LIFT translates human speech into high-level planning commands, which are then executed autonomously using event-based neuromorphic vision and physics-driven planning. Our framework demonstrates its capabilities by navigating a dynamic environment, avoiding obstacles, and adapting to human instructions in real time.

Demonstration images of Neuro-LIFT navigating through a moving ring in an indoor setting are provided, showcasing the system’s interactive, collaborative potential in autonomous robotics.

@inproceedings{joshi2025neuroliftneuromorphicllmbasedinteractive, title = {Neuro-LIFT: A Neuromorphic, LLM-based Interactive Framework for Autonomous Drone FlighT at the Edge}, author = {Joshi, Amogh and Sanyal, Sourav and Roy, Kaushik}, year = {2025}, eprint = {2501.19259}, archiveprefix = {arXiv}, primaryclass = {cs.RO}, url = {https://arxiv.org/abs/2501.19259}, doi = {10.48550/arXiv.2501.19259}, }

- Real-Time Neuromorphic Navigation: Guiding Physical Robots with Event-Based Sensing and Task-Specific Reconfigurable Autonomy Stack
  Sourav Sanyal*, Amogh Joshi*, Adarsh Kosta, and Kaushik Roy
  Preprint arXiv:2503.09636, 2025

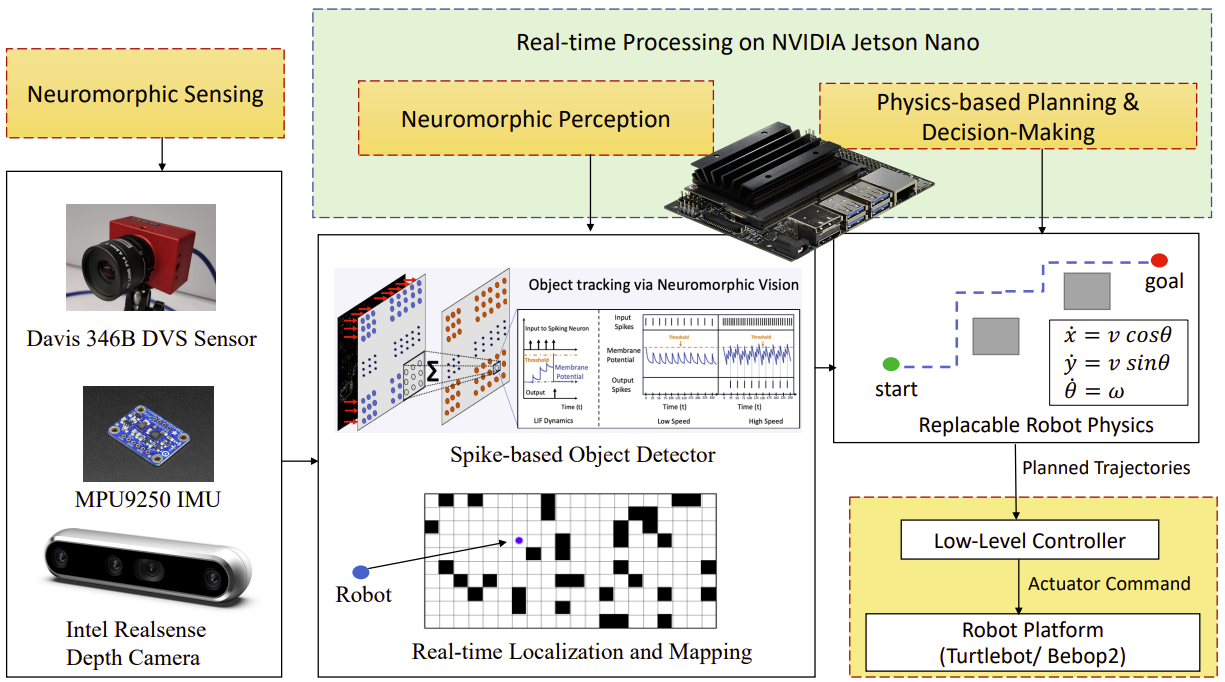

Neuromorphic vision, inspired by biological neural systems, has recently gained significant attention for its potential to enhance robotic autonomy. This paper presents a systematic exploration of a proposed Neuromorphic Navigation framework that uses event-based neuromorphic vision to enable efficient, real-time navigation in robotic systems. We discuss the core concepts of neuromorphic vision and navigation, highlighting their impact on robotic perception and decision-making. The proposed reconfigurable Neuromorphic Navigation framework adapts to the specific needs of both ground robots (Turtlebot) and aerial robots (Bebop2 quadrotor), addressing the task-specific design requirements (algorithms) for optimal performance across the autonomous navigation stack: Perception, Planning, and Control. We demonstrate the versatility and effectiveness of the framework through two case studies: a Turtlebot performing local replanning for real-time navigation and a Bebop2 quadrotor navigating through moving gates. Our work provides a scalable approach to task-specific, real-time robot autonomy leveraging neuromorphic systems, paving the way for energy-efficient autonomous navigation.

@inproceedings{sanyal2025realtimeneuromorphicnavigationguiding, title = {Real-Time Neuromorphic Navigation: Guiding Physical Robots with Event-Based Sensing and Task-Specific Reconfigurable Autonomy Stack}, author = {Sanyal, Sourav and Joshi, Amogh and Kosta, Adarsh and Roy, Kaushik}, year = {2025}, eprint = {2503.09636}, archiveprefix = {arXiv}, primaryclass = {cs.RO}, url = {https://arxiv.org/abs/2503.09636}, doi = {10.48550/arXiv.2503.09636} }

- TOFFE – Temporally-binned Object Flow from Events for High-speed and Energy-Efficient Object Detection and Tracking
  Adarsh Kumar Kosta, Amogh Joshi, Arjun Roy, Rohan Kumar Manna, Manish Nagaraj, and Kaushik Roy
  Under review; preprint arXiv:2501.12482, 2025

Object detection and tracking is an essential perception task for enabling fully autonomous navigation in robotic systems. Edge robot systems such as small drones need to execute complex maneuvers at high speeds with limited resources, which places strict constraints on the underlying algorithms and hardware. Traditionally, frame-based cameras are used for vision-based perception due to their rich spatial information and simplified synchronous sensing capabilities. However, obtaining detailed information across frames incurs high energy consumption and may not even be required. In addition, their low temporal resolution renders them ineffective in high-speed motion scenarios. Event-based cameras offer a biologically inspired solution by capturing only changes in intensity levels at exceptionally high temporal resolution and low power consumption, making them ideal for high-speed motion scenarios. However, their asynchronous and sparse outputs are not natively compatible with conventional deep learning methods. In this work, we propose TOFFE, a lightweight hybrid framework for performing event-based object motion estimation (including pose, direction, and speed estimation), referred to as Object Flow. TOFFE integrates bio-inspired Spiking Neural Networks (SNNs) and conventional Analog Neural Networks (ANNs) to efficiently process events at high temporal resolutions while being simple to train. Additionally, we present a novel event-based synthetic dataset involving high-speed object motion to train TOFFE. Our experimental results show that TOFFE achieves 5.7x/8.3x reduction in energy consumption and 4.6x/5.8x reduction in latency on edge GPU (Jetson TX2) / hybrid hardware (Loihi-2 and Jetson TX2), respectively, compared to previous event-based object detection baselines.

@inproceedings{kosta2025toffetemporallybinnedobject, title = {TOFFE -- Temporally-binned Object Flow from Events for High-speed and Energy-Efficient Object Detection and Tracking}, author = {Kosta, Adarsh Kumar and Joshi, Amogh and Roy, Arjun and Manna, Rohan Kumar and Nagaraj, Manish and Roy, Kaushik}, year = {2025}, eprint = {2501.12482}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, url = {https://arxiv.org/abs/2501.12482}, doi = {10.48550/arXiv.2501.12482} }

2024

- Real-Time Neuromorphic Navigation: Integrating Event-Based Vision and Physics-Driven Planning on a Parrot Bebop2 Quadrotor
  Amogh Joshi, Sourav Sanyal, and Kaushik Roy
  40th Anniversary of the IEEE International Conference on Robotics and Automation (ICRA@40), 2024

In autonomous aerial navigation, real-time and energy-efficient obstacle avoidance remains a significant challenge, especially in dynamic and complex indoor environments. This work presents a novel integration of neuromorphic event cameras with physics-driven planning algorithms implemented on a Parrot Bebop2 quadrotor. Neuromorphic event cameras, characterized by their high dynamic range and low latency, offer significant advantages over traditional frame-based systems, particularly in poor lighting conditions or during high-speed maneuvers. We use a DVS camera with a shallow Spiking Neural Network (SNN) for event-based object detection of a moving ring in real-time in an indoor lab. Further, we enhance drone control with physics-guided empirical knowledge inside a neural network training mechanism, to predict energy-efficient flight paths to fly through the moving ring. This integration results in a real-time, low-latency navigation system capable of dynamically responding to environmental changes while minimizing energy consumption. We detail our hardware setup, control loop, and modifications necessary for real-world applications, including the challenges of sensor integration without burdening the flight capabilities. Experimental results demonstrate the effectiveness of our approach in achieving robust, collision-free, and energy-efficient flight paths, showcasing the potential of neuromorphic vision and physics-driven planning in enhancing autonomous navigation systems.

@inproceedings{joshi2024realtimeneuromorphicnavigationintegrating, title = {Real-Time Neuromorphic Navigation: Integrating Event-Based Vision and Physics-Driven Planning on a Parrot Bebop2 Quadrotor}, author = {Joshi, Amogh and Sanyal, Sourav and Roy, Kaushik}, booktitle = {40th Anniversary of the IEEE International Conference on Robotics and Automation (ICRA@40)}, year = {2024}, eprint = {2407.00931}, archiveprefix = {arXiv}, primaryclass = {cs.RO}, url = {https://arxiv.org/abs/2407.00931}, doi = {10.48550/arXiv.2407.00931}, }

- FEDORA: A Flying Event Dataset fOr Reactive behAvior
  Amogh Joshi, Wachirawit Ponghiran, Adarsh Kosta, Manish Nagaraj, and Kaushik Roy
  2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024

The ability of resource-constrained biological systems such as fruit flies to perform complex, high-speed maneuvers in cluttered environments has been one of the prime sources of inspiration for developing vision-based autonomous systems. To emulate this capability, the perception pipeline of such systems must integrate information cues from tasks including optical flow and depth estimation, object detection and tracking, and segmentation, among others. However, the conventional approach of employing slow, synchronous inputs from standard frame-based cameras constrains these perception capabilities, particularly during high-speed maneuvers. Recently, event-based sensors have emerged as low-latency, low-energy alternatives to standard frame-based cameras for capturing high-speed motion, effectively speeding up perception and hence navigation. For coherence, all the perception tasks must be trained on the same input data. However, present-day datasets are curated mainly for a single task or a handful of tasks and are limited in the rate of the provided ground truths. To address these limitations, we present Flying Event Dataset fOr Reactive behAvior (FEDORA), a fully synthetic dataset for perception tasks, with raw data from frame-based cameras, event-based cameras, and Inertial Measurement Units (IMU), along with ground truths for depth, pose, and optical flow at a rate much higher than existing datasets.

@inproceedings{joshi2024fedora, author = {Joshi, Amogh and Ponghiran, Wachirawit and Kosta, Adarsh and Nagaraj, Manish and Roy, Kaushik}, booktitle = {2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title = {FEDORA: A Flying Event Dataset fOr Reactive behAvior}, year = {2024}, volume = {}, number = {}, pages = {5859-5866}, keywords = {Training;Accuracy;Event detection;Tracking;Computational modeling;Cameras;Optical flow;Standards;Streams;Synthetic data}, doi = {10.1109/IROS58592.2024.10801807}, url = {https://ieeexplore.ieee.org/document/10801807} }

2021

-

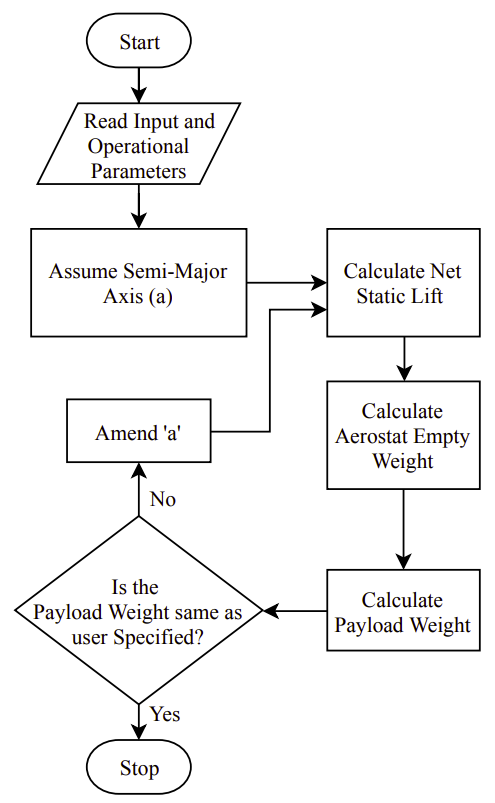

A Methodology for Sizing of a Mini-Aerostat SystemSaurabh V. Bagare, Amogh Joshi, and Rajkumar S Pant2021

View Video Presentation: https://doi.org/10.2514/6.2021-2986.vid

This paper describes a methodology for sizing and aerostatic lift determination of a relocatable mini-aerostat system. The system consists of an adequately sized, double-layered spheroid envelope, below which a payload-carrying bay is mounted. The methodology can be used to determine the envelope dimensions needed to carry a given payload while meeting all user-specified operating requirements. A detailed scheme has been developed to determine the net static and gross lift generated by the aerostat envelope as a function of operating requirements such as floating altitude, ambient temperature, pressure, superheat, superpressure, humidity, and the type and purity of the lifting gas. The methodology is used to size a mini-aerostat system that provides last-mile communication for first responders in a post-disaster scenario or at remote locations. As the payload weight increases from 50 to 250 N, the envelope volume nearly doubles, in an almost linear fashion. The methodology has been coded as an applet in Simulink and Python for ease of use. To demonstrate the methodology's capability to investigate what-if scenarios, the change in size of helium- or hydrogen-filled envelopes has been determined as a function of payload and the altitude of the deployment location, for oblate and prolate spheroid-shaped envelopes.

@inproceedings{doi:10.2514/6.2021-2986, author = {Bagare, Saurabh V. and Joshi, Amogh and Pant, Rajkumar S}, title = {A Methodology for Sizing of a Mini-Aerostat System}, booktitle = {AIAA AVIATION 2021 FORUM}, year = {2021}, doi = {10.2514/6.2021-2986}, url = {https://arc.aiaa.org/doi/abs/10.2514/6.2021-2986} }